10 very practical suggestions for choosing an email archiving solution

What makes a good archiving solution? Count 1 to 10:

1- No vendor lock-in

Archiving email is a long-term commitment. You need to think long term and make sure that, 10 or 20 years from now, you will be able to use your email archive autonomously, easily and reliably.

If the data is stored in a proprietary format, you will not be able to move it to a different archiving solution. You may not even be able to read it without the vendor's software, and the vendor may no longer be around by then.

This is why it is important that your emails are stored in an open format, one that you can read with open tools and without any special knowledge: your current staff may not be around when you need to recover the archive.

In 10 or 20 years, a proprietary tool may no longer run on whatever operating system is current. Even if it does, you may run into technical issues that require support from a vendor that no longer exists. When that happens you will probably be in a hurry, and you will find that everything is much more complicated, expensive and time-consuming than you expected.

Having the whole archive in an open, standard format that you can easily recover without any specialized tool is crucial. The best solution lets you retrieve the email you are looking for with the bare minimum of tools: a file manager.

Our email archiver stores plain EML files inside Zip files, one Zip file per day or per 4000 emails. The filename of each Zip file clearly states the archive date, so the minimum tool you need to search and recover email is a file manager.

Why this choice? Zip is a standard format, supported by a large number of open tools, now and in the future. It provides compression, de-duplication and state-of-the-art encryption (AES-256). There is no need to reinvent the wheel, unless you are aiming to lock your customers into a proprietary format.
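To illustrate how little tooling an open format requires, here is a minimal sketch that searches the EML files inside a Zip archive using only the Python standard library. The member names and sample messages are made up for the example, and the real archive layout may differ. It also assumes an unencrypted Zip: the standard `zipfile` module cannot decrypt AES-encrypted archives, so those would first need a tool that supports AES.

```python
import io
import zipfile
from email import message_from_bytes, policy


def find_messages(zip_bytes, subject_fragment):
    """Return (member name, subject) for every EML whose subject matches."""
    matches = []
    with zipfile.ZipFile(io.BytesIO(zip_bytes)) as archive:
        for name in archive.namelist():
            if not name.lower().endswith(".eml"):
                continue
            msg = message_from_bytes(archive.read(name), policy=policy.default)
            subject = str(msg["Subject"] or "")
            if subject_fragment.lower() in subject.lower():
                matches.append((name, subject))
    return matches


def build_sample_archive():
    """Build a tiny in-memory Zip with two EML files (illustrative data only)."""
    buffer = io.BytesIO()
    with zipfile.ZipFile(buffer, "w", zipfile.ZIP_DEFLATED) as archive:
        archive.writestr(
            "0001.eml", "From: a@example.com\r\nSubject: Invoice 42\r\n\r\nHello\r\n"
        )
        archive.writestr(
            "0002.eml", "From: b@example.com\r\nSubject: Lunch\r\n\r\nNoon?\r\n"
        )
    return buffer.getvalue()
```

Calling `find_messages(build_sample_archive(), "invoice")` returns the single matching member and its subject. The same loop works on a Zip file opened from disk.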

2- Legal validity

It is important that your stored email can be used as legal evidence, should you need it. This means being able to prove that:

- the email has been received and archived at a specific time

- it has not been modified afterwards

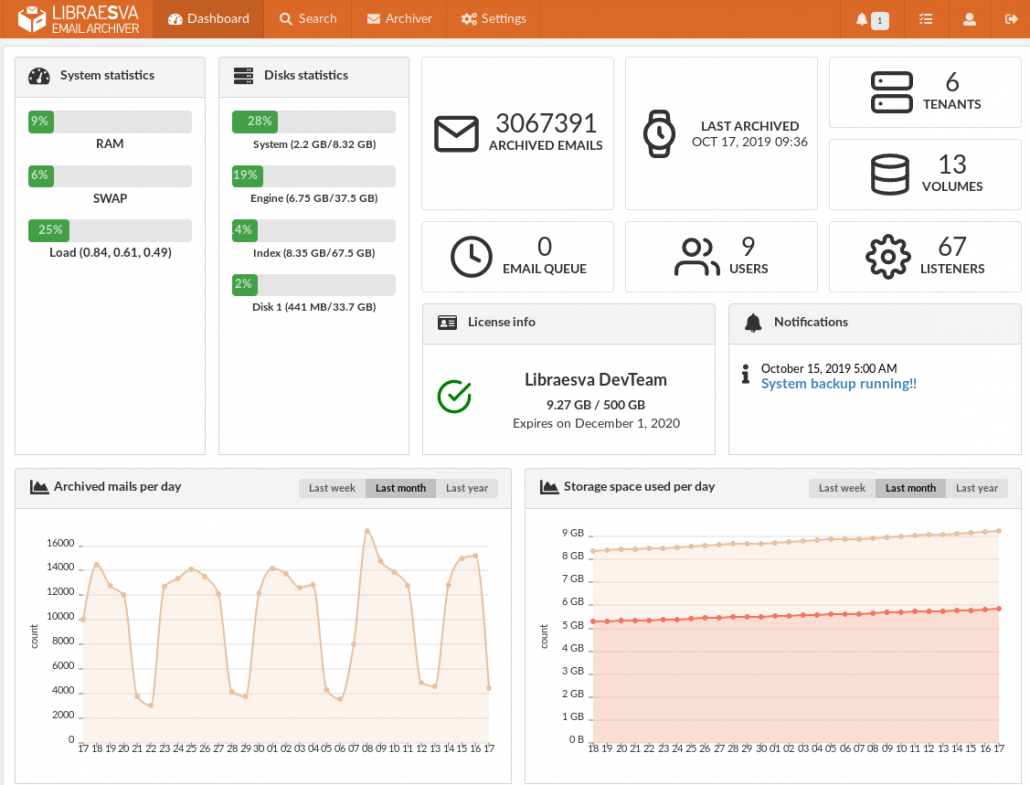

There is a standard for this, formalized in RFC 3161 (the Time-Stamp Protocol), called “certified time-stamping”. It is an open and documented standard, supported by many open tools that can be used to verify authenticity.

Our archiver ships with an embedded certification authority that certifies every single email that is stored. This happens automatically, out of the box, with no configuration needed.

Whenever an email is retrieved from the archive, the archiver also automatically verifies its integrity.
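An RFC 3161 time-stamp token covers a hash of the data (the "message imprint"), so an integrity check starts by re-hashing the stored message and comparing the result with the recorded value. The sketch below shows only that comparison step. It assumes SHA-256 as the hash algorithm and uses hypothetical function names. Validating the time-stamping authority's signature on the token is a separate step that is not shown here.

```python
import hashlib
import hmac


def message_imprint(eml_bytes):
    """SHA-256 digest of the raw message (hash algorithm is an assumption)."""
    return hashlib.sha256(eml_bytes).hexdigest()


def is_unmodified(eml_bytes, recorded_imprint):
    """True if the message still hashes to the imprint recorded at archive time."""
    return hmac.compare_digest(message_imprint(eml_bytes), recorded_imprint)
```

Changing even a single byte of the message produces a different digest, which is why a matching imprint is good evidence that the content has not been altered since it was time-stamped.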

Legal validity should just work, without any configuration burden, and this is how we designed it.

3- Multiple copies

Storing all of your stuff in a single place is not wise. This applies to backups and especially to email archiving, which is much more than a set of backups. You must be able to store multiple copies of your archive in multiple locations, automatically and continuously.

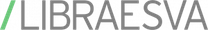

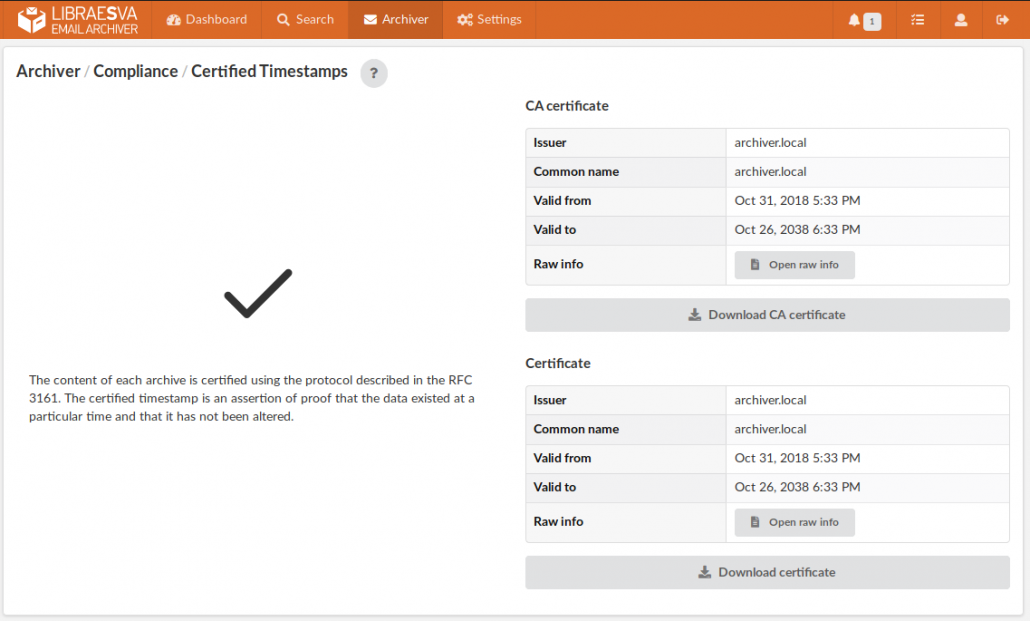

Our archiver supports an unlimited number of volumes of different types: local disks, LAN drives, object storage (supporting virtually all providers and protocols), FTP and SFTP.

Email is automatically stored in multiple copies on different volumes, which can be in many different geographical locations (archives are encrypted with AES-256). You can also define different retention times for different volumes. For example, you can decide that a local volume stores the last 5 years of email while a remote object storage volume stores the last 20 years' worth.
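The per-volume retention idea can be sketched as follows. The `Volume` class, the volume names and the 365-day approximation of a year are assumptions made for the example, not the archiver's actual configuration model.

```python
from dataclasses import dataclass
from datetime import date


@dataclass(frozen=True)
class Volume:
    name: str             # hypothetical label for the volume
    retention_years: int  # how long this volume keeps each email


def volumes_holding(archived_on, today, volumes):
    """Names of the volumes that should still hold an email archived on a date."""
    age_days = (today - archived_on).days
    # A year is approximated as 365 days to keep the sketch short.
    return [v.name for v in volumes if age_days <= v.retention_years * 365]
```

With a 5-year local volume and a 20-year remote volume, an email archived two years ago is expected on both, while one archived twelve years ago is expected only on the remote volume.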

4- Usability

Email archiving is not just for compliance; it's also a tool to improve the productivity of the company. Users should be able to use it to retrieve their own archived email. For that to happen, user interaction must be straightforward.

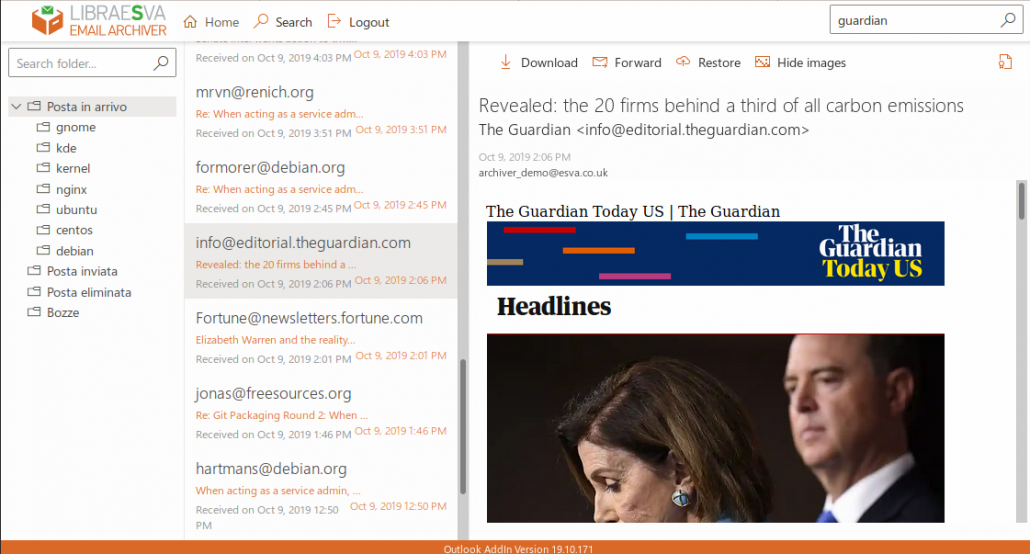

We provide an Outlook plugin (it also works in OWA and O365) which can be deployed automatically, an iOS app, an Android app and a web app. Users are not limited to their own email archive: delegation is supported, and saved searches can be shared with other users, which provides an easy way to delegate access to a well-defined subset of email.

Slow mail server? The archiver can automatically delete old email from your mail server, after verifying that it is safely stored in the archive.

The archiver provides a full-text search engine that is much faster than searching on a mail server. In user-interaction terms, a response is generally perceived as “instantaneous” when it arrives in under 100 ms, and this is the response time of a standard search query on a large archive of millions of email messages on our archiver.

5- Privacy

Of course, you need to make sure that privacy is properly enforced: email must be visible only to its legitimate owners, and nobody else, including administrators if that’s your policy, should be able to read it.

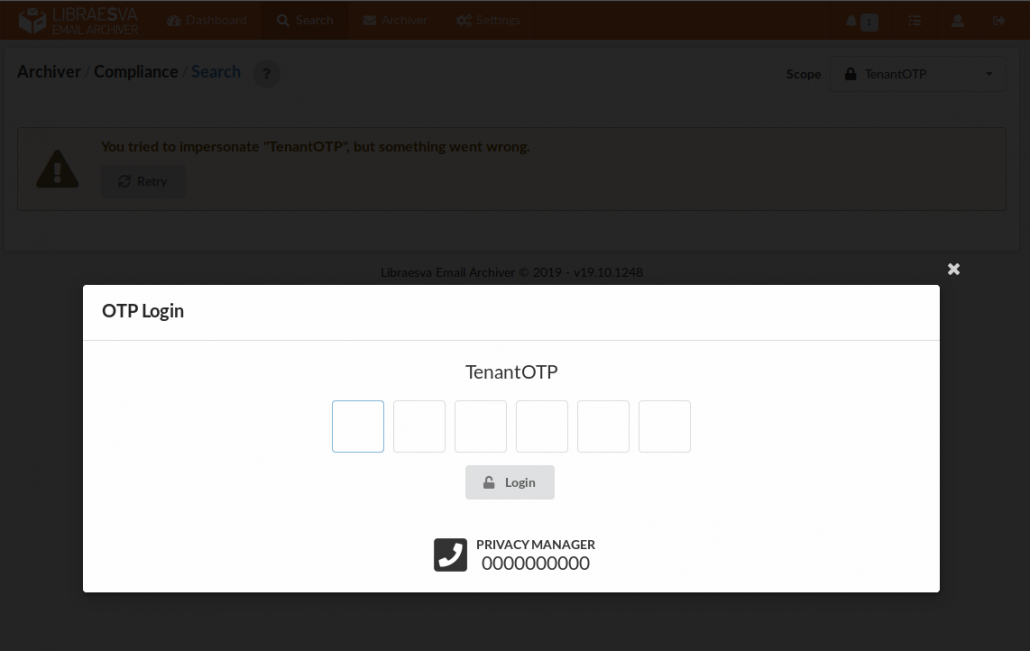

On our archiver, a tenant can be protected by a privacy officer, which means that even the administrator must ask for authorization in order to access email contents.

The authorization process is fast and straightforward: time-based OTP (think Google Authenticator). Six digits that can easily be dictated over the phone make for lean but strong privacy enforcement. Once authorization has been granted, everything is logged and reported back to the privacy officer.
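For readers unfamiliar with time-based OTP, the following is a minimal sketch of the RFC 6238 algorithm (HMAC-SHA1 variant, 30-second time step) that authenticator apps implement. It is a generic illustration, not the archiver's own code. The function names are hypothetical, and the secret is passed as raw bytes, whereas authenticator apps usually exchange it Base32-encoded.

```python
import hashlib
import hmac
import struct
import time


def totp(secret, at_time=None, step=30, digits=6):
    """Compute an RFC 6238 time-based one-time password (HMAC-SHA1 variant)."""
    if at_time is None:
        at_time = time.time()
    counter = int(at_time) // step  # number of time steps since the Unix epoch
    digest = hmac.new(secret, struct.pack(">Q", counter), hashlib.sha1).digest()
    offset = digest[-1] & 0x0F      # dynamic truncation, as defined in RFC 4226
    code = struct.unpack(">I", digest[offset:offset + 4])[0] & 0x7FFFFFFF
    return str(code % 10 ** digits).zfill(digits)


def verify(secret, submitted, at_time=None):
    """Compare a submitted code with the expected one in constant time."""
    return hmac.compare_digest(totp(secret, at_time), submitted)
```

Because both sides derive the code from a shared secret and the current time, the privacy officer only has to read out six digits. A production check would typically also accept the adjacent time step to tolerate clock drift.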

6- Flexible ingestion

The email archive will follow you over time. The archiving system must be very flexible in terms of ingestion, so that you can easily move to a different email system or migrate to or from the cloud without having to rethink your archiving strategy.

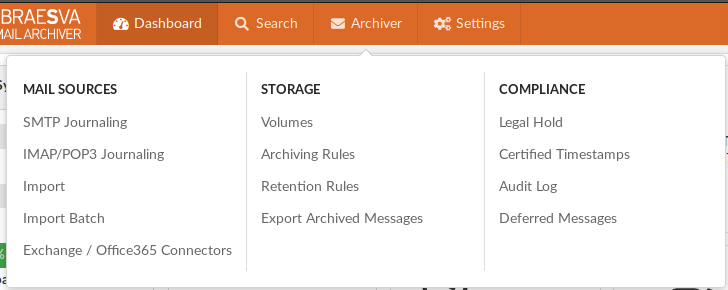

Supported ingestion methods must include SMTP journaling, SMTP forwarding, IMAP, POP3, native O365 and Exchange connectors.

Import of PST or EML archives should be supported, as well as export to the same formats.

We’ve also implemented batch import for a painless bootstrap: you can provide entire disks full of PSTs or EML archives and they will be imported automatically no matter how big they are.
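As a rough idea of what the first stage of a batch import involves, the sketch below walks a directory tree and reads the headers of every EML file it finds. It covers only EML (PST is a proprietary format that the Python standard library cannot read), the function name is hypothetical, and it is not a description of how the archiver's importer is implemented.

```python
import os
from email import policy
from email.parser import BytesParser


def scan_eml_tree(root):
    """Yield (path, Message-ID) for every .eml file found under root."""
    parser = BytesParser(policy=policy.default)
    for folder, _subdirs, files in os.walk(root):
        for filename in sorted(files):
            if not filename.lower().endswith(".eml"):
                continue
            path = os.path.join(folder, filename)
            with open(path, "rb") as handle:
                # Only the headers are parsed, so large bodies are not decoded.
                headers = parser.parse(handle, headersonly=True)
            yield path, str(headers["Message-ID"] or "")
```

Because the function is a generator, it processes one file at a time and its memory use does not grow with the size of the disk being scanned.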

7- Integration

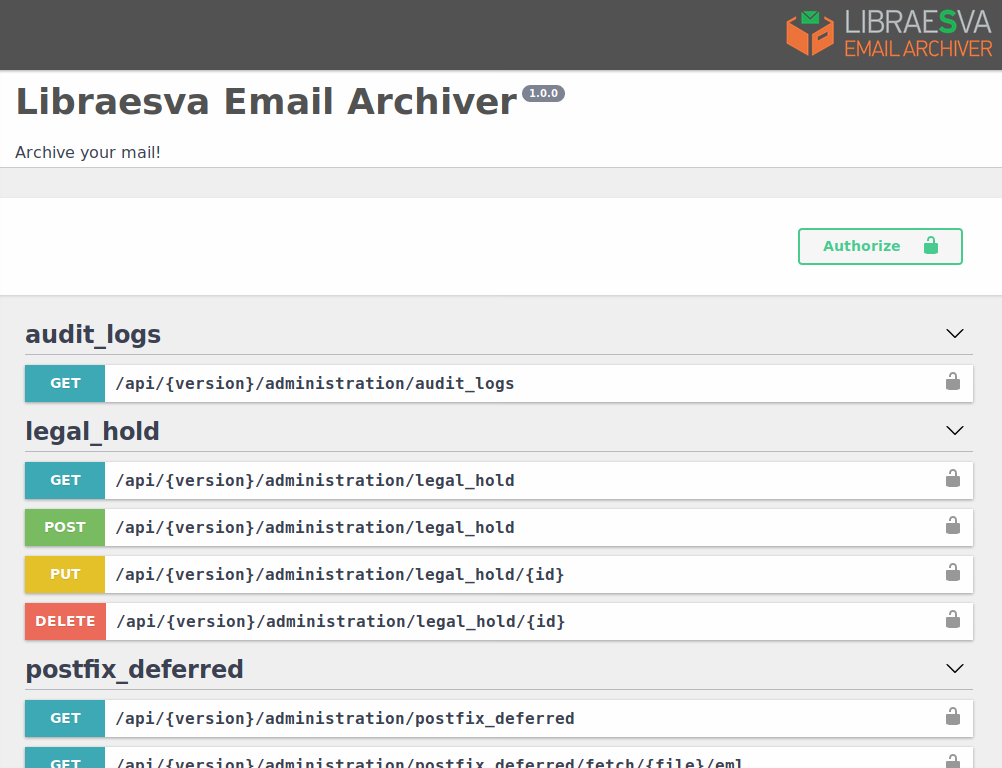

A full API is important when you need to integrate with your infrastructure, especially if you are an ISP or an MSP and you want to integrate the email archiving service with your existing web panels.

All the features that our archiver provides are available through a complete REST API. It is so complete that all of our front ends (the web app, the mobile apps and the Outlook plugin) interact with the archiver only through the API, so every function is naturally exposed via the API.

You can run the archiver in completely headless mode if you want, and build any integration you will ever need.

8- Ease of deployment

Cloud? On-prem? That should all be covered. Who knows how your infrastructure will evolve in 10 or 20 years?

A virtual appliance provides the maximum flexibility: you can run it in the cloud or on premises, you can easily migrate it and increase its resources over time, and you have no proprietary hardware to replace, move around or repair.

The virtual appliance updates itself automatically, so new features are installed without any manual work.

9- Archiving flexibility

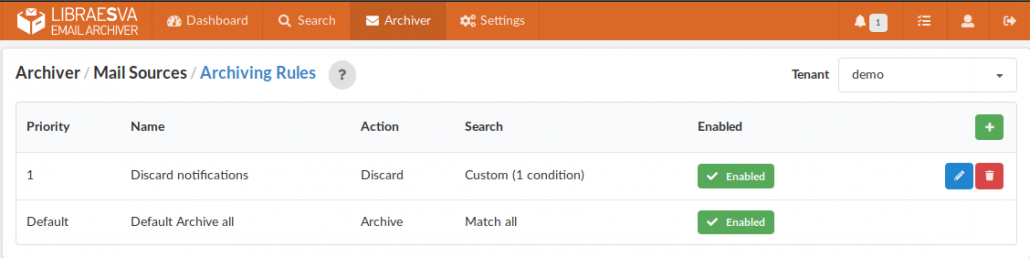

You should be able to choose what you want to archive and what you don’t. You should be able to choose different retention times for different emails: newsletters? One year may be enough. Correspondence with your lawyer? 10 years minimum. And so on.

Talking about lawyers: legal hold is the capability of locking some email (for example related to a legal case) until a specified date. This is what you need to make sure that absolutely nothing happens to it until the case is over.

Archiving rules, retention rules, legal hold: all of these are covered by our archiver and can be configured with high precision and granularity, using an advanced graphical query builder.
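The interplay between retention rules and legal hold can be sketched as a small decision function. Everything here is illustrative: the `Email` and `RetentionRule` classes, the first-match-wins rule order, the 10-year default and the 365-day year are assumptions, not the archiver's actual rule engine.

```python
from dataclasses import dataclass
from datetime import date, timedelta
from typing import Callable, Optional


@dataclass
class Email:
    sender: str
    archived_on: date
    legal_hold_until: Optional[date] = None  # None means no legal hold


@dataclass
class RetentionRule:
    matches: Callable[[Email], bool]  # predicate selecting the emails covered
    years: int                        # retention period for those emails


def may_delete(email, rules, today, default_years=10):
    """True only if no legal hold is active and the retention period has expired."""
    if email.legal_hold_until is not None and today <= email.legal_hold_until:
        return False  # legal hold always wins over retention rules
    # The first matching rule decides; otherwise the default period applies.
    years = next((r.years for r in rules if r.matches(email)), default_years)
    return today > email.archived_on + timedelta(days=365 * years)
```

The key design point is the order of the checks: the legal hold is evaluated first, so an expired retention period can never cause the deletion of an email that is locked for a case.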

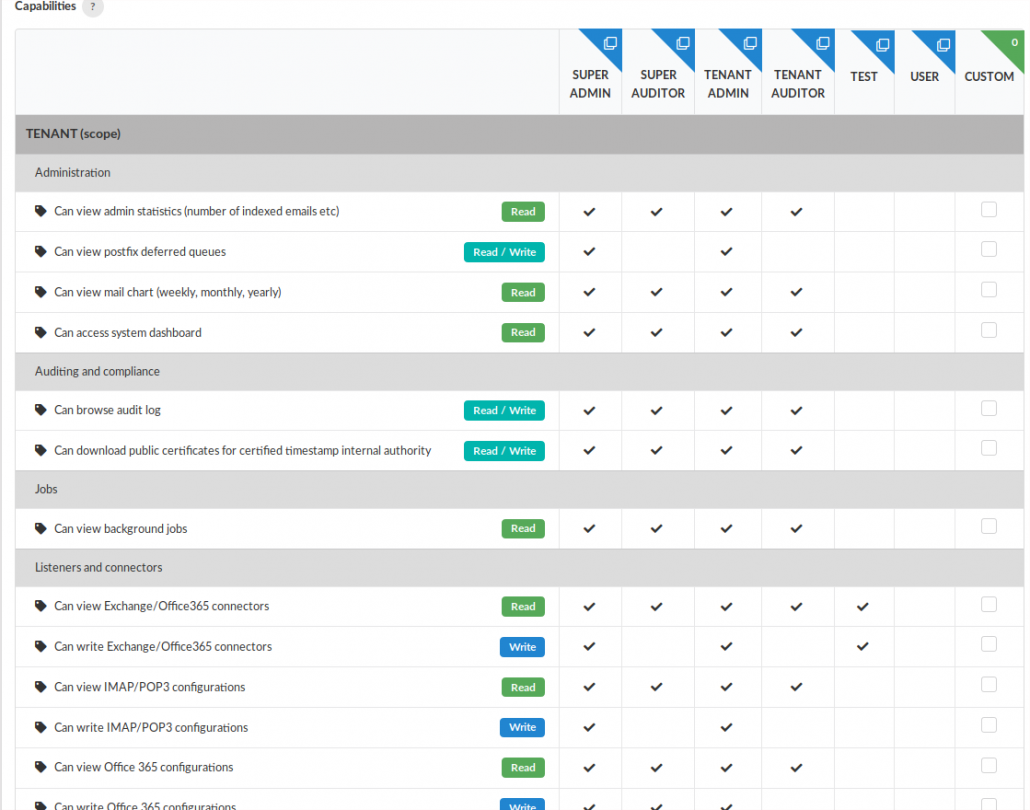

10- Granular permissions

Who can do what? You should get to choose, without any limitation. The permission system must adapt to your current and future policies, not the other way around.

Besides the “standard” roles of admin, auditor and user, on our archiver you can create custom roles in a very granular way. A role is basically a collection of capabilities and there are about 80 different capabilities that you can assign to any role you need.
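The "role as a collection of capabilities" model can be sketched in a few lines. The role names beyond the standard ones and all capability names below are invented for the example. The archiver's real capability list is not reproduced here.

```python
# Each role is a set of capabilities (all names are hypothetical).
ROLES = {
    "auditor": frozenset({"search.all", "audit.view_log"}),
    "user": frozenset({"search.own", "export.own"}),
    "helpdesk": frozenset({"search.own", "user.reset_password"}),  # custom role
}


def can(role_names, capability, roles=ROLES):
    """True if any of the given roles grants the capability."""
    return any(capability in roles.get(name, frozenset()) for name in role_names)
```

For example, `can(["user"], "search.all")` is false, while `can(["user", "auditor"], "search.all")` is true. Adding a new policy means defining a new set of capabilities, without touching the check itself.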